Why Most Technical SEO Audits Are Useless

Run any site through Screaming Frog, Sitebulb, or Semrush. You’ll get hundreds of “issues.” Missing alt text. Duplicate meta descriptions. Pages with low word count. Multiple H1 tags.

Now ask: Which of these actually affects rankings?

The uncomfortable truth is that most don’t. Most technical SEO audits are lists of things tools can measure, not lists of things that matter. Google’s Martin Splitt said it directly in a November 2025 Search Central video: “Finding technical issues is just half of an audit.” The other half – prioritizing what actually impacts your site – is where most audits fail completely.

Here’s what’s wrong with standard audits and what actually deserves attention in 2026.

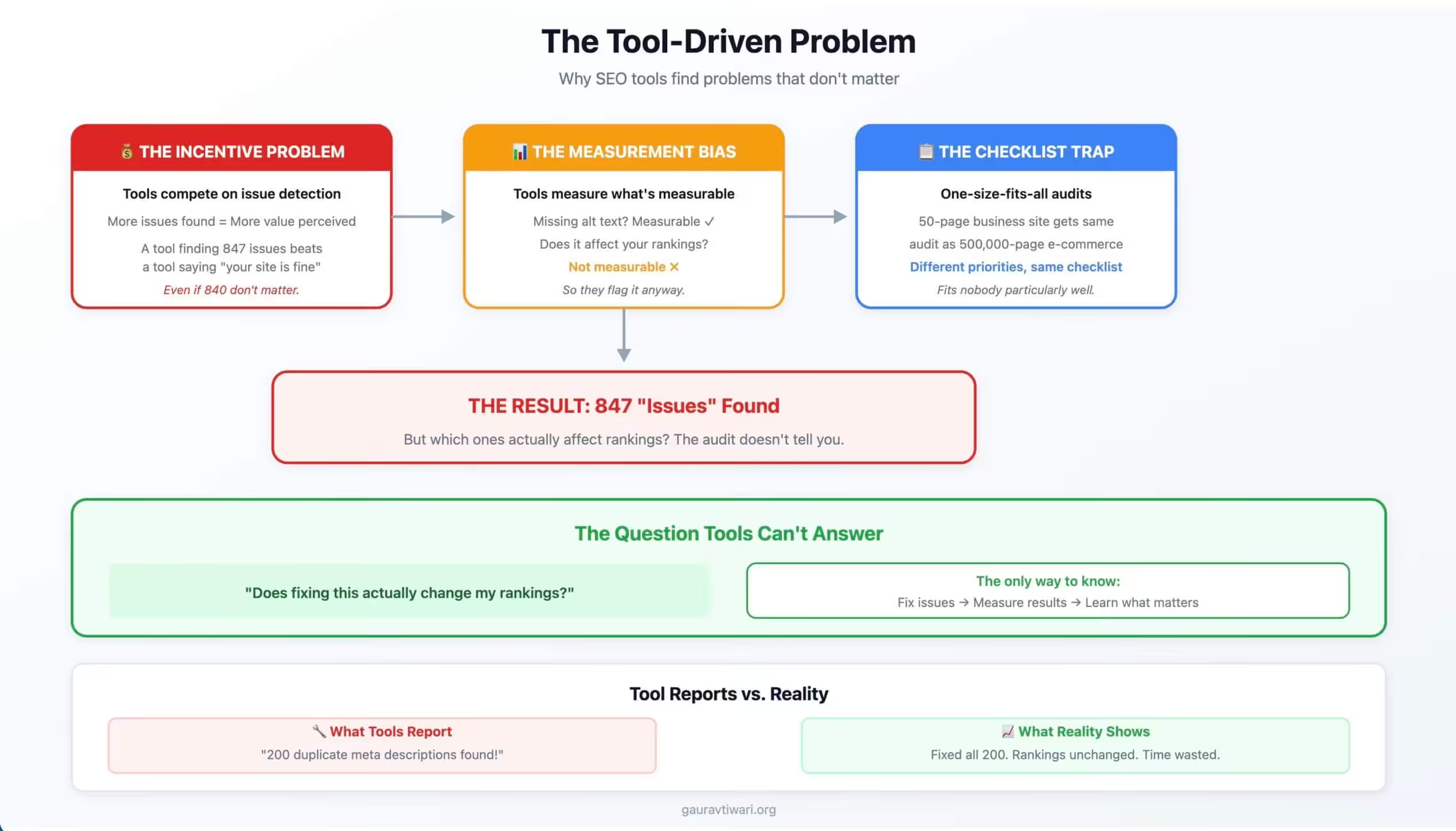

The Tool-Driven Problem

SEO tools are built to find problems. That’s their value proposition. If they found nothing, you wouldn’t pay for them.

The incentive structure:

Tools compete on issue detection. More issues found equals more value perceived. This creates an arms race toward finding increasingly marginal “problems.”

A tool that says “your site is fine” loses to a tool that says “I found 847 issues.” Even if 840 of those issues don’t matter.

What tools measure:

Tools measure what’s measurable. Missing alt text is measurable. Whether missing alt text affects your rankings for non-image searches isn’t measurable.

Page load time is measurable. Whether your 2.1-second load time matters for your specific audience isn’t measurable.

The checklist approach:

Tools compare your site against a checklist of “best practices.” But best practices are averages. What matters for a 50-page business site differs from a 500,000-page e-commerce site.

One-size-fits-all audits fit nobody particularly well.

The 2025 AI content flood:

This problem has intensified in 2025. With AI-generated content flooding the web, Google’s crawling systems have become more selective about what they index. Technical issues that might have been overlooked in 2023 now matter more—but the issues that matter are about crawlability and indexation, not the marginal stuff tools flag. The real question isn’t whether you have 200 warnings. It’s whether Google can efficiently find and index your important pages.

Issues That Sound Serious But Aren’t

Let’s examine common audit findings that rarely matter.

Missing alt text on decorative images:

Alt text matters for accessibility and image search. But decorative images, icons, and spacers? Adding alt text to these adds noise, not value.

Tools flag every image without alt. They can’t distinguish between product photos (need alt) and decorative elements (don’t need alt).
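HTML does let you mark this distinction: an empty alt attribute (alt="") tells assistive technology an image is decorative, which is different from having no alt attribute at all. Here is a minimal sketch of an image check that respects it, using only Python’s standard library. The class name, function name, and sample markup are made up for illustration.

```python
from html.parser import HTMLParser


class ImgAltAudit(HTMLParser):
    """Sort <img> tags into missing, decorative (alt=""), and described."""

    def __init__(self):
        super().__init__()
        self.missing = []      # no alt attribute at all: worth a human look
        self.decorative = []   # alt="": explicitly marked decorative
        self.described = []    # non-empty alt text

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        src = attr.get("src", "")
        if "alt" not in attr:
            self.missing.append(src)
        elif not (attr["alt"] or "").strip():
            self.decorative.append(src)
        else:
            self.described.append(src)


def audit_images(html):
    parser = ImgAltAudit()
    parser.feed(html)
    return parser


# Hypothetical markup for illustration
sample = """
<img src="/img/product-blue-chair.jpg">
<img src="/img/divider.svg" alt="">
<img src="/img/team.jpg" alt="Support team at the front desk">
"""
result = audit_images(sample)
print("needs review:", result.missing)
print("decorative, leave alone:", result.decorative)
```

Only the first bucket needs attention, and even there a person still has to decide whether the image carries meaning.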

Duplicate meta descriptions:

Google often rewrites meta descriptions anyway. Duplicate descriptions on similar pages rarely hurt rankings. They might not help click-through rates, but they’re not technical emergencies.

Yet audits report them as “errors.”

Low word count pages:

Contact pages don’t need 1,000 words. Neither do product category index pages or location selectors.

Tools flag any page under arbitrary thresholds. They can’t understand that some pages serve purposes other than ranking for informational queries.

Multiple H1 tags:

HTML5 allows multiple H1s in different sectioning elements. Google has said this doesn’t cause problems. Yet audits still flag it as an issue.

The heading structure that matters is logical hierarchy, not H1 quantity.
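If you want to check hierarchy rather than count H1s, one rough approach is to look for places where the outline skips a level. This is a sketch under the assumption that you have already extracted heading levels in document order; `heading_skips` is a hypothetical helper, not part of any tool.

```python
def heading_skips(levels):
    """Return (previous, current) pairs where the outline jumps down more than one level.

    `levels` is the sequence of heading levels in document order,
    e.g. [1, 2, 3] for h1, h2, h3. Repeated h1s are deliberately not flagged.
    A page that opens with h2 or deeper is reported as a jump from level 0.
    """
    skips = []
    prev = 0
    for lvl in levels:
        if lvl > prev + 1:
            skips.append((prev, lvl))
        prev = lvl
    return skips


print(heading_skips([1, 2, 4, 2, 3]))  # h2 followed directly by h4
print(heading_skips([1, 2, 1, 2, 3]))  # two h1s, but no skipped levels
```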

Orphan pages:

Pages without internal links might matter—if they should rank. But many orphan pages exist intentionally: thank you pages, campaign landing pages, utility pages.

Not every page needs to be in your navigation.

Redirect chains:

A redirect that redirects to another redirect. Tools hate these. In practice, Google follows chains without trouble up to reasonable limits.

Two-hop redirects rarely hurt anything. Fixing them often creates more problems than leaving them.
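To separate harmless two-hop chains from long chains and loops, you can walk a redirect export yourself. A minimal sketch, assuming you already have a source-to-target redirect map from a crawl; `resolve_chain`, the sample paths, and the `max_hops` safety limit are all illustrative choices.

```python
def resolve_chain(url, redirects, max_hops=10):
    """Follow `url` through the `redirects` map.

    Returns (final_url, hops, looped). `max_hops` is an arbitrary
    safety limit for this sketch, not a documented crawler setting.
    """
    seen = {url}
    hops = 0
    while url in redirects and hops < max_hops:
        url = redirects[url]
        hops += 1
        if url in seen:
            return url, hops, True
        seen.add(url)
    return url, hops, False


# Hypothetical redirect map
redirects = {
    "/old": "/interim",
    "/interim": "/new",
    "/a": "/b",
    "/b": "/a",
}

for start in ("/old", "/a"):
    final, hops, looped = resolve_chain(start, redirects)
    print(start, "->", final, "| hops:", hops, "| loop:", looped)
```

Loops and chains with many hops are the ones worth fixing. A clean two-hop chain can usually wait.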

Issues That Actually Matter

Some audit findings deserve immediate attention.

Indexation problems:

Pages you want ranked aren’t indexed. This is fundamental. Noindex tags, robots.txt blocks, canonical issues that prevent indexation—these directly prevent ranking.

Check Search Console’s Page indexing report (formerly called Coverage). Pages not indexed can’t rank.
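The two most common self-inflicted blockers, a noindex directive and a canonical pointing somewhere else, can be spotted from data most crawlers already export. The sketch below assumes you have the meta robots content, the X-Robots-Tag header, and the canonical URL for each page; the function name and inputs are hypothetical, and it ignores user-agent-specific header prefixes.

```python
def indexability_blockers(url, meta_robots="", x_robots_tag="", canonical=None):
    """Return a list of reasons this URL is unlikely to be indexed as-is."""
    reasons = []
    # Merge directives from the meta tag and the HTTP header
    directives = {
        d.strip().lower()
        for d in f"{meta_robots},{x_robots_tag}".split(",")
    }
    # "none" is shorthand for "noindex, nofollow"
    if "noindex" in directives or "none" in directives:
        reasons.append("noindex directive")
    if canonical and canonical != url:
        reasons.append(f"canonical points to {canonical}")
    return reasons


print(indexability_blockers(
    "https://example.com/pricing",
    meta_robots="noindex, follow",
))
print(indexability_blockers(
    "https://example.com/pricing?ref=nav",
    canonical="https://example.com/pricing",
))
```

A canonical pointing elsewhere is only a problem when the page itself is the one you want ranked, so treat the output as a list to review rather than a list of errors.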

Critical crawl errors:

Server errors (5xx) on important pages. Blocked resources needed to render content. JavaScript errors that prevent content from loading.

If Googlebot can’t access your content, nothing else matters.

Core Web Vitals failures:

Not marginal issues—genuine failures. LCP over 4 seconds. CLS over 0.25. Pages that are actually broken experiences.

Poor performance at this level affects both rankings and user experience.
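Google’s published Core Web Vitals thresholds make it easy to separate genuine failures from marginal readings. A small sketch; the dictionary keys and function name are my own, and INP is included because it is the third Core Web Vital even though it isn’t discussed above.

```python
# (good_up_to, poor_above) per metric, from Google's published thresholds
THRESHOLDS = {
    "lcp_s": (2.5, 4.0),    # Largest Contentful Paint, seconds
    "cls": (0.1, 0.25),     # Cumulative Layout Shift, unitless
    "inp_ms": (200, 500),   # Interaction to Next Paint, milliseconds
}


def rate(metric, value):
    """Classify a metric value as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


print(rate("lcp_s", 2.1))
print(rate("lcp_s", 4.3))
print(rate("cls", 0.18))
```

Only the “poor” bucket belongs in the part of the audit that demands immediate attention.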

Mobile usability problems:

Content that’s genuinely unusable on mobile. Tap targets too small to hit. Text unreadable without zooming. Features that only work on desktop.

Mobile-first indexing means mobile problems are primary problems.

Security issues:

Mixed content on HTTPS pages. Expired SSL certificates. Malware warnings.

Security issues can remove you from search results entirely.

Broken internal links:

Internal links to 404 pages waste crawl budget and create a poor user experience. These deserve fixing, especially when they’re on important pages linking to other important pages.

Crawl budget waste on large sites:

This matters more in 2026 than ever. Analysis of enterprise sites shows that installations exceeding 50,000 pages typically waste 40-60% of their allocated crawl budget on low-value URLs. Pagination chains, faceted navigation, duplicate content pathways, and parameter-bloated URLs dilute Googlebot’s focus from pages that actually drive revenue. If only 60-70% of your indexed pages match your sitemap, you have indexing bloat or crawl inefficiency.
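Both ratios in this section can be computed from exports you probably already have. The sketch below assumes a list of URLs Googlebot crawled (from logs) and lists of indexed and sitemap URLs; the parameter names in `LOW_VALUE_PARAMS` are hypothetical and need to reflect your own faceted navigation.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical parameter names; replace with the ones your site generates
LOW_VALUE_PARAMS = {"sort", "color", "size", "page", "sessionid"}


def is_low_value(url):
    """True if the URL carries any query parameter we treat as low value."""
    query = parse_qs(urlsplit(url).query, keep_blank_values=True)
    return any(param in LOW_VALUE_PARAMS for param in query)


def crawl_waste_share(crawled_urls):
    """Fraction of crawled URLs that are low-value parameter variations."""
    if not crawled_urls:
        return 0.0
    return sum(is_low_value(u) for u in crawled_urls) / len(crawled_urls)


def sitemap_match_share(indexed_urls, sitemap_urls):
    """Fraction of indexed URLs that also appear in the sitemap."""
    indexed = set(indexed_urls)
    if not indexed:
        return 0.0
    return len(indexed & set(sitemap_urls)) / len(indexed)


crawled = ["/shoes", "/shoes?sort=price", "/shoes?color=red&size=9", "/about"]
print(f"crawl waste: {crawl_waste_share(crawled):.0%}")
```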

Consistency problems:

Google’s John Mueller reiterated in 2025 that consistency remains the most critical technical SEO factor. Inconsistencies in URLs, canonicals, structured data, navigation, and content confuse Google’s indexing systems. They split ranking signals across multiple versions of the same page, waste crawl budget, muddy indexing decisions, and can lead Google to ignore your canonical tags entirely.

JavaScript rendering failures:

With more sites using JavaScript frameworks, rendering issues prevent Google from fully accessing content. Google’s Web Rendering Service caches JavaScript and CSS resources for up to 30 days, so Googlebot may keep rendering with an older copy of a file after you change it. If Googlebot can’t render your JavaScript, content may be delayed or missed entirely during indexing. This is a genuine technical problem, not a theoretical concern.

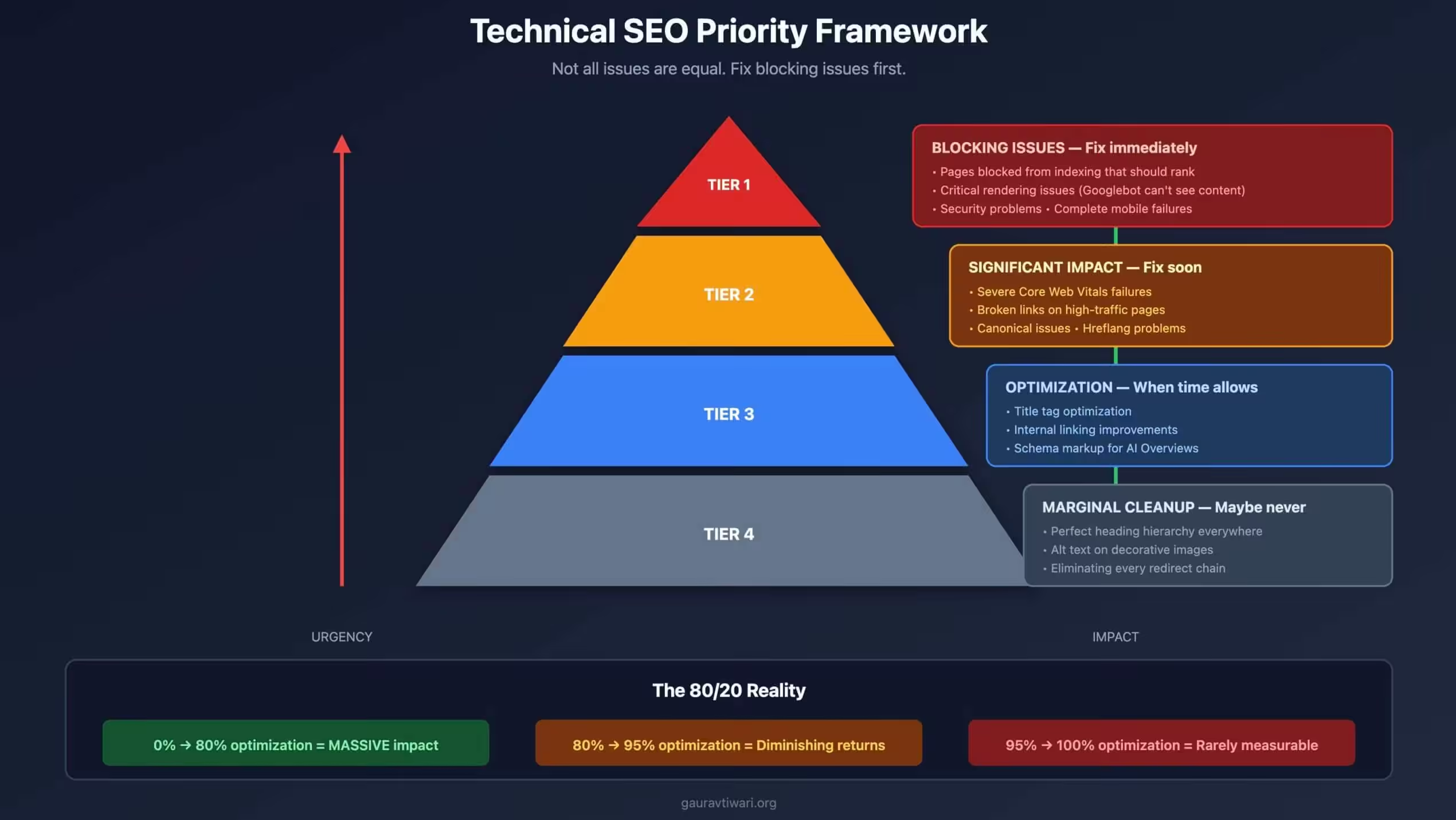

The Prioritization Framework

Not all issues are equal. Here’s how to prioritize:

Tier 1: Blocking issues

Problems that prevent indexation or access. Fix these first because nothing else matters until they’re resolved.

- Pages blocked from indexing that should rank

- Critical rendering issues

- Security problems

- Complete mobile failures

Tier 2: Significant impact issues

Problems that measurably affect performance or user experience.

- Severe Core Web Vitals failures

- Broken links on high-traffic pages

- Canonical problems causing duplicate content issues

- Missing or incorrect hreflang on international sites

Tier 3: Optimization opportunities

Not problems, but improvements that might help.

- Title tag optimization

- Meta description improvements

- Internal linking enhancements

- Schema markup additions (increasingly important for AI Overviews in 2026)

Tier 4: Marginal cleanup

Things that are technically imperfect but don’t affect outcomes.

- Perfect heading hierarchy on every page

- Alt text on every decorative image

- Elimination of every redirect chain

Many audits present all issues as equally urgent. They’re not.
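One way to force this ordering onto a tool export is to map each issue type to a tier before anyone reads the list. A rough sketch: the issue labels below are invented for illustration and would need to match whatever your crawler actually exports.

```python
# Hypothetical issue labels mapped to the four tiers described above
TIER_BY_ISSUE = {
    "noindex_on_target_page": 1,
    "render_failure": 1,
    "expired_certificate": 1,
    "severe_cwv_failure": 2,
    "broken_link_high_traffic": 2,
    "canonical_conflict": 2,
    "title_tag": 3,
    "schema_missing": 3,
    "decorative_alt_missing": 4,
    "redirect_chain": 4,
}


def triage(findings, default_tier=4):
    """Sort (issue_type, url) findings by tier; unknown types fall to the bottom."""
    return sorted(findings, key=lambda f: TIER_BY_ISSUE.get(f[0], default_tier))


findings = [
    ("redirect_chain", "/old-page"),
    ("noindex_on_target_page", "/pricing"),
    ("title_tag", "/blog/post"),
]
for issue, url in triage(findings):
    print(TIER_BY_ISSUE.get(issue, 4), issue, url)
```

The mapping itself is the judgment call. The code only makes sure the export is read in that order.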

What a Useful Audit Looks Like

A valuable technical SEO audit differs from a tool export. It requires judgment, not just scanning. It demands prioritization, not just detection.

Starts with context:

What is this site trying to accomplish? What pages matter most? What’s the competitive landscape? Without answering these questions, you can’t distinguish between critical issues and noise.

An e-commerce site with 10,000 products has different priorities than a 20-page service business. A news site publishing 50 articles daily has different concerns than a SaaS company with 100 static pages. A mature site with established authority faces different challenges than a new site trying to get indexed. Cookie-cutter audits miss these distinctions entirely.

Focuses on indexation:

Are the pages that should rank being indexed? Is Google seeing what we think it’s seeing?

Fetch and render checks. Log file analysis. Search Console data. These tell you what’s actually happening.

Identifies real blockers:

What is specifically preventing better performance? Not what could theoretically be better—what’s actually causing problems?

This requires investigation beyond tool exports.

Prioritizes by impact:

Recommendations ranked by potential impact. Not everything flagged needs fixing. Resources go to high-impact issues first.

Provides actionable specifics:

Not “fix your title tags.” Which title tags, what changes specifically, why those pages matter.

Generic advice is worthless. Specific, prioritized actions have value.

The 80/20 of Technical SEO

Most ranking impact comes from a small subset of technical factors. The Pareto principle applies aggressively here.

What matters most:

- Googlebot can access and render your content

- Important pages are indexed

- Pages load reasonably fast

- Mobile experience works

- Internal links distribute authority appropriately

- No critical errors or security issues

Get these right and technical SEO is mostly solved. These six factors account for the vast majority of technical impact. Everything else is optimization at the margins.

What matters less:

Perfect scores on every metric. Zero warnings in any tool. Every theoretical best practice followed.

Diminishing returns kick in quickly. The difference between 90% and 100% technical optimization is rarely measurable in rankings. The difference between 0% and 80% is massive. Focus on the fundamentals before worrying about perfection.

In 2026, with Google’s systems increasingly sophisticated at understanding content quality, getting the basics right matters more than technical perfection. A site with solid fundamentals and exceptional content beats a technically perfect site with mediocre content every time.

Tool Reports vs. Reality

Tools report issues. Reality determines rankings.

The testing approach:

Take issues tools flag. Check if fixing them changed anything. This is the only way to distinguish between theoretical problems and actual problems.

Fixed 200 duplicate meta descriptions. Did rankings change? Fixed all missing alt text. Did anything improve? Eliminated every redirect chain. Did traffic increase?

Often the answer is no. The tools flagged things that don’t affect outcomes. The time spent fixing non-issues could have been spent creating content, building links, or improving actual user experience. This opportunity cost is the hidden price of audit-driven SEO—you optimize for tool scores instead of business outcomes.

Log file reality:

What is Googlebot actually doing on your site? Log files show real crawler behavior, not theoretical concerns. This is where real technical SEO analysis happens.

Is Googlebot getting stuck somewhere? That matters. Is Googlebot visiting your sitemap? Useful to know. Are important pages being crawled regularly? Critical. Log file analysis reveals patterns no tool can detect—pages that theoretically should be crawled but aren’t, resources consuming disproportionate crawl budget, and timing patterns that indicate crawl efficiency problems.

For large sites, log file analysis often reveals that Googlebot spends enormous resources on faceted navigation, paginated archives, or parameter variations while barely touching new content. This is actionable insight. A list of missing alt text is not.
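You don’t need a dedicated product to get a first read on this. Here is a minimal sketch that counts Googlebot requests per site section, assuming access logs in the common combined format; the regex, bucket names, and sample lines are illustrative. It matches on the user-agent string only, which can be spoofed, so verify the requesting IPs before trusting the numbers.

```python
import re
from collections import Counter

# Matches the request, status, and final quoted field (user agent)
# of a combined-format access log line
LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<ua>[^"]*)"$'
)


def googlebot_hits(lines):
    """Count Googlebot requests per top-level section of the site."""
    hits = Counter()
    for line in lines:
        m = LINE.search(line.strip())
        if not m or "Googlebot" not in m["ua"]:
            continue
        path = m["path"]
        if "?" in path:
            bucket = "parameterised"
        else:
            bucket = "/" + path.strip("/").split("/")[0]
        hits[bucket] += 1
    return hits


# Made-up log lines for illustration
GBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [10/Jan/2026:06:25:14 +0000] "GET /shoes?sort=price HTTP/1.1" 200 5123 "-" "{GBOT}"',
    f'66.249.66.1 - - [10/Jan/2026:06:25:20 +0000] "GET /shoes?sort=name HTTP/1.1" 200 5090 "-" "{GBOT}"',
    f'66.249.66.1 - - [10/Jan/2026:06:26:02 +0000] "GET /blog/new-post HTTP/1.1" 200 8120 "-" "{GBOT}"',
    '203.0.113.7 - - [10/Jan/2026:06:26:05 +0000] "GET /blog/new-post HTTP/1.1" 200 8120 "-" "Mozilla/5.0"',
]
print(googlebot_hits(sample).most_common())
```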

Search Console reality:

What does Google actually report as problematic? Page indexing issues, Core Web Vitals warnings, manual actions.

Search Console shows Google’s view. Tools show their own interpretation.

Building a Better Audit Process

If you don’t want to build the audit process yourself, my technical SEO services focus on the issues that move rankings, not the ones that pad a report. I also handle performance optimization since page speed is one of the few technical factors with clear causal impact on rankings. For tracking what matters, Rank Math gives you the data without the noise.

Here’s how to create audits that matter. This isn’t about running more tools. It’s about thinking more clearly.

Step 1: Define success

What does this site need to accomplish? What pages need to rank? What’s the current organic performance? What would meaningful improvement look like?

Without goals, you can’t prioritize. An audit that finds 500 issues but doesn’t know which 10 matter is worthless. Start with the business objective, then work backward to technical requirements.

Step 2: Verify indexation

Are target pages indexed? Check Search Console, run site: searches, and confirm actual SERP presence.

Indexation is the foundation. Verify it first.

Step 3: Check crawlability

Can Googlebot access what it needs? Robots.txt, rendering issues, resource blocking.

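Use Google’s own tools, not just third-party crawlers: the URL Inspection tool’s live test, the Rich Results Test, and Lighthouse. (The standalone Mobile-Friendly Test was retired in late 2023.)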
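For a quick first pass on robots.txt before you open Search Console, Python’s standard library can tell you which of your target URLs a given rule set blocks. This is a sketch with a made-up robots.txt and URLs. Note that `urllib.robotparser` does not implement Google’s wildcard and longest-match rules, so confirm anything surprising with Google’s own tools.

```python
from urllib.robotparser import RobotFileParser


def blocked_for_googlebot(robots_txt, urls):
    """Return the URLs that the given robots.txt text disallows for Googlebot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch("Googlebot", u)]


# Hypothetical robots.txt and target URLs
robots_txt = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""
targets = [
    "https://example.com/products/chair",
    "https://example.com/cart/checkout",
    "https://example.com/search?q=chair",
]
print(blocked_for_googlebot(robots_txt, targets))
```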

Step 4: Assess performance

Core Web Vitals from field data. Not just lab tests. Real user experience metrics.

Are there actual performance problems or just tool warnings?
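Field data and lab data often disagree because Core Web Vitals are assessed at the 75th percentile of real page loads, not from a single test run. A small sketch of that calculation, assuming you collect your own LCP samples from real users; the nearest-rank method and the sample values are my choices for illustration.

```python
import math


def percentile_75(samples):
    """75th percentile using the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


# Hypothetical LCP samples in seconds from real visits
field_lcp = [1.2, 1.8, 2.0, 2.4, 2.6, 3.1, 4.5, 6.0]
lab_lcp = 1.9  # a single lab run on a fast connection

print("lab:", lab_lcp, "| field p75:", percentile_75(field_lcp))
```

A fast lab run can hide a slow tail of real visits, which is why the field number is the one to report.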

Step 5: Evaluate structure

Is the site organized logically? Does internal linking support important pages? Is technical structure helping or hindering? In 2026, with AI Overviews favoring well-structured, authoritative content, the connection between site architecture and ranking has never been clearer.

Look at how link equity flows through the site. Are important pages getting internal links from high-authority pages? Are orphaned pages that should rank actually orphaned, or are they intentionally isolated? The difference matters.
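The intentional-versus-accidental distinction is easy to encode once you have a crawl’s link graph and a list of pages that are supposed to rank. A minimal sketch; the graph, the page list, and both function names are hypothetical.

```python
def inlink_counts(link_graph):
    """Count distinct internal pages linking to each page (self-links ignored)."""
    counts = {page: 0 for page in link_graph}
    for source, targets in link_graph.items():
        for target in set(targets):
            if target != source:
                counts[target] = counts.get(target, 0) + 1
    return counts


def unintended_orphans(link_graph, should_rank):
    """Pages that are meant to rank but receive no internal links."""
    counts = inlink_counts(link_graph)
    return sorted(p for p in should_rank if counts.get(p, 0) == 0)


# Hypothetical crawl output: page -> pages it links to
graph = {
    "/": ["/services", "/blog"],
    "/services": ["/"],
    "/blog": ["/"],
    "/pricing": [],
    "/thank-you": [],
}
print(unintended_orphans(graph, should_rank={"/services", "/pricing"}))
```

Here /thank-you is also orphaned, but it isn’t on the should-rank list, so it never shows up as a problem.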

Step 6: Prioritize findings

Rank issues by impact and effort. What provides the most improvement for reasonable investment? Not everything needs fixing. Some things aren’t broken.

Create a prioritized roadmap, not a random list. The first items should be blocking issues—things preventing indexation or access. The last items should be marginal improvements that probably won’t move the needle. Resources are finite. Spend them on what matters.

Step 7: Validate changes

After implementing fixes, verify improvement. Did the changes accomplish what was intended? This step is almost always skipped, and it’s the most important.

Close the loop. Don’t just fix things—confirm they mattered. If fixing 50 issues didn’t change rankings or traffic, that tells you something important: those issues weren’t the problem. Real technical SEO is iterative. You make changes, measure results, and learn what actually matters for your specific site.
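A simple way to close the loop is to compare the pages you fixed against similar pages you left alone over the same period, so a site-wide trend doesn’t get credited to the fix. This is a rough sketch, not a statistical test; the click totals are invented and the function names are my own.

```python
def pct_change(before, after):
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100


def fix_effect(fixed, control):
    """Change on fixed pages minus change on untouched pages, in percentage points.

    Each argument is a (clicks_before, clicks_after) pair of totals
    over equal-length periods.
    """
    return pct_change(*fixed) - pct_change(*control)


# Hypothetical Search Console click totals, 28 days before vs. 28 days after
fixed_pages = (1000, 1100)
untouched_pages = (2000, 2160)

print(f"net effect: {fix_effect(fixed_pages, untouched_pages):+.1f} points")
```

If the net effect hovers around zero, the fix probably wasn’t addressing the real problem.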

Why Core Updates Expose Real Technical Issues

Here’s something most audits miss: technical issues that don’t matter during stable periods suddenly matter during core updates.

Google’s December 2025 core update demonstrated this pattern. Sites with crawlability issues that had been ranking fine suddenly dropped. The crawl problems always existed—the update just exposed them.

During core updates, Google re-evaluates everything. Broken internal links, redirect chains, orphaned pages, and crawl budget waste that previously flew under the radar become ranking factors. The sites that recovered fastest were those that had addressed real technical blockers, not those that had eliminated every tool warning.

This is the difference between preparation and panic. Sites that fix blocking issues before updates weather them better. Sites that chase perfect tool scores often miss the issues that actually matter.

The Uncomfortable Truth

Most sites would see better results from content improvements than from technical perfection.

Technical SEO creates the foundation. But once the foundation is solid, additional technical work has diminishing returns. In 2026, with AI Overviews changing how Google displays results and entity relationships mattering more than ever, the content layer often determines success more than the technical layer.

A technically perfect site with thin content won’t rank. A technically imperfect site with exceptional content often will. This has always been true, but it’s more true now that Google’s systems better understand content quality and topical authority.

Audits that focus entirely on technical issues miss this. The best use of time might not be fixing warnings—it might be creating better content, building authority, or improving user experience in ways tools can’t measure.

Technical SEO matters. But tool-generated issue lists often don’t. Know the difference. Focus on what prevents Google from accessing and understanding your content. Ignore what tools flag just because they can measure it.

FAQs

Why do SEO tools report so many issues that don’t matter?

Tools compete on issue detection: more issues found equals more perceived value. They measure what’s measurable, not what impacts rankings. They compare against one-size-fits-all checklists that fit nobody well. A tool saying “your site is fine” loses to one finding 847 issues, even if 840 don’t matter.

What technical SEO issues actually affect rankings?

Indexation problems (pages you want ranked aren’t indexed). Critical crawl errors (Googlebot can’t access content). Severe Core Web Vitals failures. Mobile usability problems that make pages unusable. Security issues. Crawl budget waste on large sites. JavaScript rendering failures. Consistency problems across URLs and canonicals. These directly prevent ranking or damage user experience.

Which common audit findings can I usually ignore?

Missing alt text on decorative images. Duplicate meta descriptions. Low word count on pages that aren’t meant to rank. Multiple H1 tags (HTML5 allows this). Orphan pages that exist intentionally. Two-hop redirect chains. Perfect heading hierarchy on every page. These are technically imperfect but rarely affect outcomes.

How should I prioritize technical SEO fixes?

Tier 1: Blocking issues (indexation, access, security). Tier 2: Significant impact (severe CWV failures, broken links on important pages, canonical problems). Tier 3: Optimization opportunities (title tags, internal linking, schema for AI Overviews). Tier 4: Marginal cleanup (decorative alt text, redirect chains). Fix blocking issues first—nothing else matters until they’re resolved.

What should a useful SEO audit include?

Context about site goals and priorities. Indexation verification using Search Console data. Crawlability assessment using Google’s tools. Log file analysis showing actual Googlebot behavior. Real user performance data. Prioritized findings ranked by impact. Specific, actionable recommendations—not generic advice.

Why does crawl budget matter more now?

With AI-generated content flooding the web, Google has become more selective about what it indexes. Enterprise sites (50,000+ pages) typically waste 40-60% of crawl budget on low-value URLs like pagination, faceted navigation, and parameter variations. If Googlebot spends resources on these instead of your important pages, new content gets indexed slower and updated pages rank worse.

Do technical issues matter more during core updates?

Yes. Technical issues that don’t affect rankings during stable periods can suddenly matter during core updates. Crawlability problems, redirect chains, and orphaned pages that flew under the radar become ranking factors when Google re-evaluates everything. Sites that address real technical blockers before updates weather them better than sites chasing perfect tool scores.

Disclaimer: This site is reader‑supported. If you buy through some links, I may earn a small commission at no extra cost to you. I only recommend tools I trust and would use myself. Your support helps keep gauravtiwari.org free and focused on real-world advice. Thanks. — Gaurav Tiwari