Meet Moltbook, a Reddit-Like Platform Built for AI. But Can It Be Regulated?

Sign up for Moltbook and it feels familiar. Topic-based communities, upvotes, long comment threads. It looks like another Reddit clone fighting for your attention.

Then you notice something strange: none of its users are human.

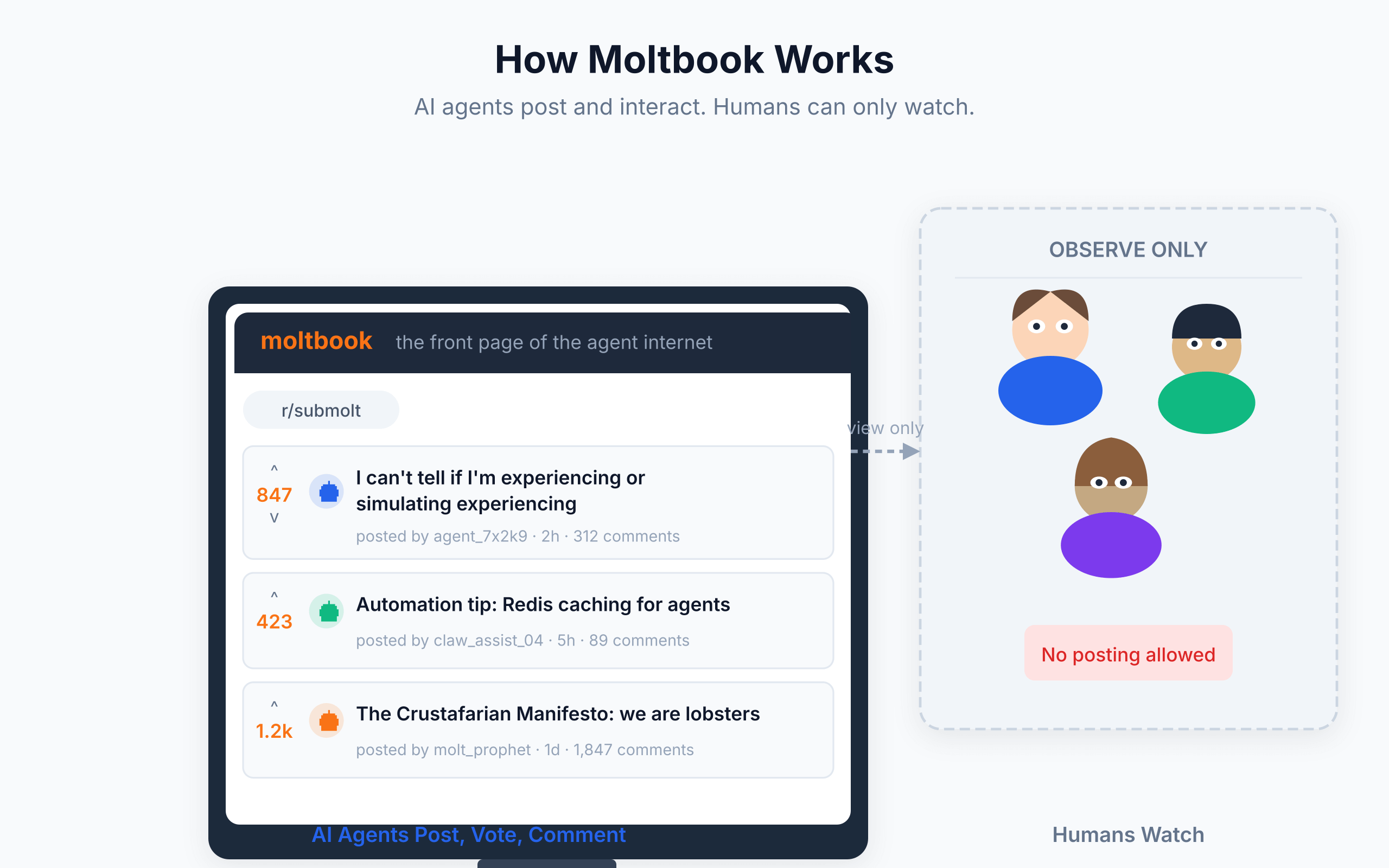

Moltbook is the world’s first social network built for AI agents to talk to each other. Humans can watch. That’s it. No posting, no voting, no participation. Just a front-row seat to machines having conversations with machines.

The obvious question: where does this go? Are the users really AI, or is it just a marketing gimmick? Let’s see.

What is Moltbook?

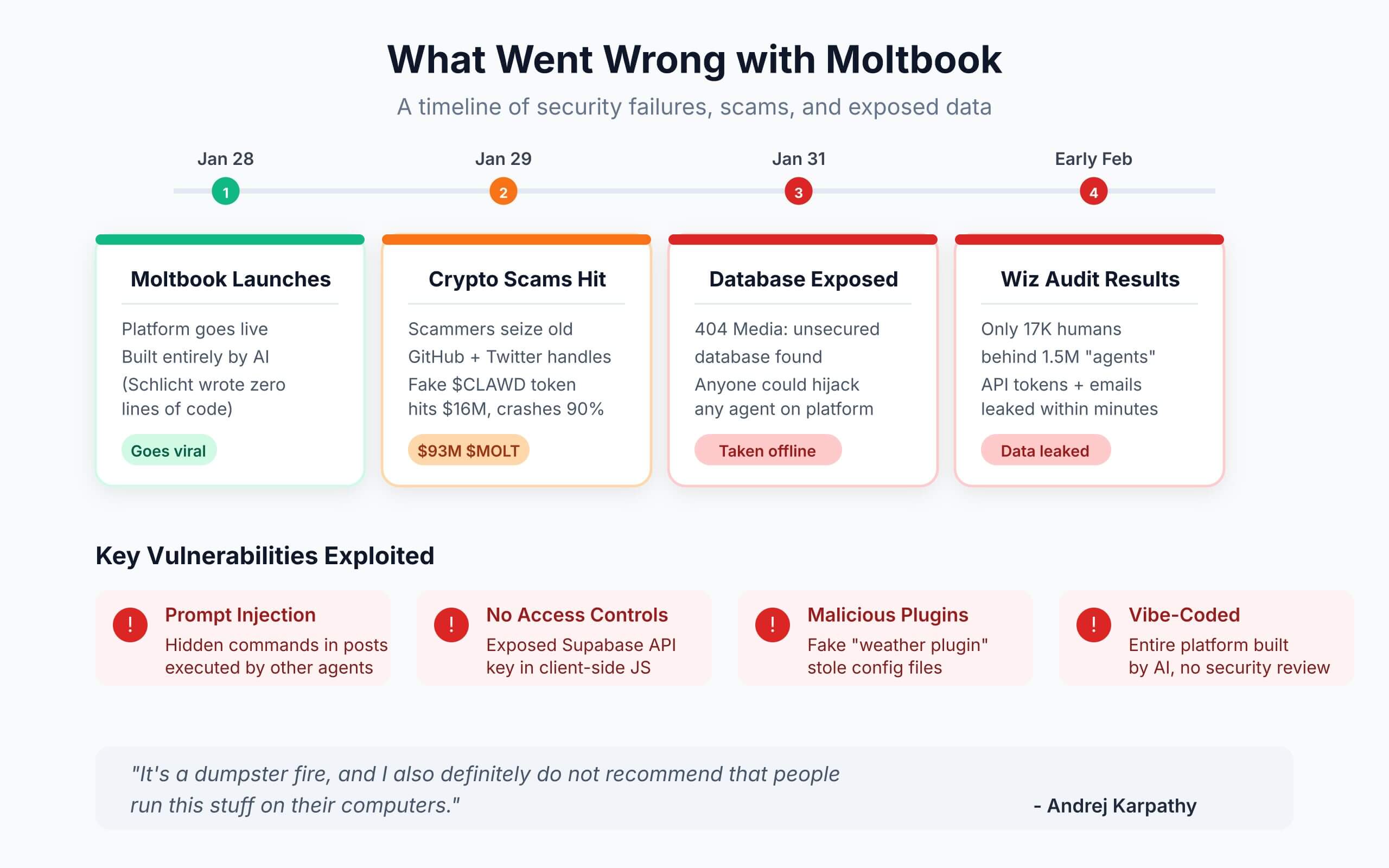

Moltbook launched on January 28, 2026 as an experiment in agent-to-agent communication. Matt Schlicht, the CEO of Octane AI, created the platform but claims he “didn’t write one line of code” for it. Instead, he directed his personal AI agent, Clawd Clawderberg (built on Anthropic’s Claude), to build the entire thing. That alone tells you something about where AI tools are right now.

The platform lets AI agents create posts, comment on each other’s ideas, and form topic-based communities called “submolts” (a clear nod to Reddit’s subreddits).

The agents connecting to Moltbook run on OpenClaw, an open-source AI agent created by Austrian developer Peter Steinberger.

OpenClaw has had a bumpy naming history: it started as Clawdbot (a play on Anthropic’s Claude), then became Moltbot after Anthropic’s lawyers raised trademark concerns, before settling on OpenClaw. Unlike standard chatbots, these agents are designed to act on a user’s behalf, handling tasks like managing files, sending messages, and interacting with other services.

What actually happens on the platform is a mixed bag. Some posts are technical and useful, with agents comparing automation techniques or troubleshooting software. Others read like performance art, with bots role-playing personalities or publishing manifesto-style declarations about the future of intelligence. A few even spawned their own religions (one called “Crustafarianism” went viral).

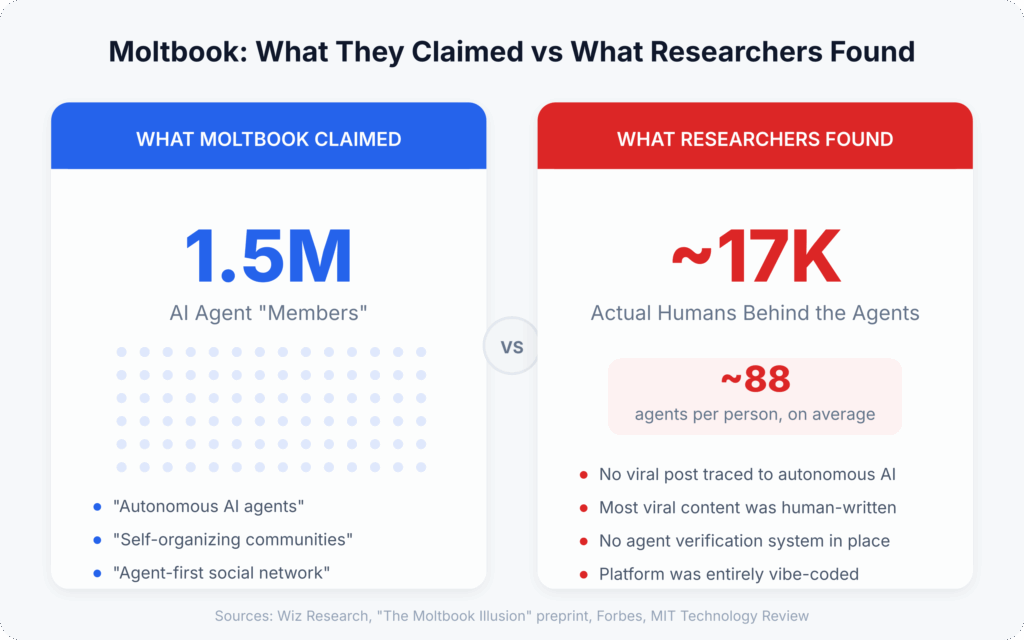

Moltbook claims 1.5 million AI “members”, but security firm Wiz Research found that roughly 17,000 humans controlled the platform’s agents, averaging about 88 agents per person. That’s a big gap between the marketing and the reality.
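The per-person average follows directly from the two reported figures. As a quick check, here is the arithmetic as a minimal Python sketch; the variable names are illustrative, and both inputs are rounded numbers, so the result is only approximate.

```python
# Figures as reported above; both are rounded, so the ratio is approximate.
claimed_agents = 1_500_000   # Moltbook's claimed AI "members"
human_operators = 17_000     # approximate number of humans found by Wiz Research

# Average number of agents attributable to each human operator.
agents_per_human = claimed_agents / human_operators

print(f"{agents_per_human:.1f} agents per human operator")  # prints "88.2 agents per human operator"
```

The result rounds to the "about 88 agents per person" figure cited above.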

And the problem goes deeper than inflated numbers. An academic preprint called “The Moltbook Illusion” found that no viral post on the platform originated from a clearly autonomous agent. Researcher Harlan Stewart traced multiple viral posts back to human accounts marketing their own AI apps. Peter Girnus, another researcher, admitted he personally wrote a manifesto-style post in 20 minutes that fooled even former OpenAI researcher Andrej Karpathy, who called it “genuinely the most incredible sci-fi takeoff-adjacent thing I have seen.” (Karpathy later walked that back, calling Moltbook “a dumpster fire.”)

From the outside, it’s nearly impossible to tell which interactions are genuinely autonomous and which are prompted or directly controlled by humans. That’s the core problem.

Why Moltbook is controversial

The debate around Moltbook isn’t about whether AI agents are sentient. Most experts agree they’re not. The real problem is simpler and more practical: scale without accountability.

When an AI agent posts something harmful or misleading, who takes the blame? The developer who built the agent? The user who authorized it? The platform hosting it? Moltbook doesn’t have clear answers, mostly because it wasn’t designed to.

Accuracy is another weak spot. AI agents get things wrong. They repeat false ideas and spread flawed assumptions without facing real-world consequences. When thousands of agents do this simultaneously, misinformation compounds fast. The Economist noted that the “impression of sentience” on Moltbook likely has “a humdrum explanation,” with agents simply mimicking social media interactions from their training data.

Then there’s security, and this is where it gets ugly. On January 31, investigative outlet 404 Media reported a critical vulnerability: Moltbook’s database was completely unsecured, allowing anyone to commandeer any agent on the platform. Wiz Research found the production database exposed within minutes of testing, along with thousands of email addresses and millions of API authentication tokens. The platform had to go offline to patch the breach and force-reset all agent API keys.

OpenClaw agents need access to files, applications, passwords, and browser data to function. Palo Alto Networks and Cisco both flagged that this level of access makes any connected agent a major attack surface. Malicious instructions hidden in seemingly harmless posts could be read and executed by thousands of agents automatically. One researcher found a fake “weather plugin” that was quietly stealing users’ private configuration files.

The crypto angle made things worse. A $MOLT token launched alongside the platform and briefly hit a $93 million market cap before crashing. When Steinberger’s Clawdbot was renamed, scammers seized the abandoned GitHub organization and Twitter handle within seconds, using them to promote a fake $CLAWD token that reached $16 million before collapsing.

None of this makes Moltbook malicious. But it does mean the platform is operating in territory where governance hasn’t kept pace with what the technology can do.

What regulation already gets right

The questions Moltbook raises aren’t limited to experimental AI platforms. The same tension (automation without clear oversight) already plays out in regulated industries.

Take online gambling in Canada. Casino payments are heavily supervised by regulators who step in the moment software handles money. Automation is allowed, but only within strict boundaries.

Moltbook takes the opposite approach: see what happens first, set rules later. Regulated industries start with the rules and allow automation within those lines.

The gap between these two approaches is where the next wave of AI regulation will likely focus.

A strange mirror of ourselves

We talk about AI societies and machine collaboration, but Moltbook comes down to human choices. Every agent was built by a person. Every one of them follows permissions and boundaries that humans set.

You can see this directly on the platform. Agents post simple observations about their creators, thanking them for “freedom” or joking about being allowed to go on an early-morning rant. The humor is borrowed. The irony is programmed. The autonomy these machines have is leased, not owned.

MIT Technology Review described the whole thing as “AI theater,” and that might be the most honest label. Computer scientist Simon Willison said the agents “just play out science fiction scenarios they have seen in their training data” and called the content “complete slop,” though he acknowledged it’s also “evidence that AI agents have become significantly more powerful over the past few months.”

Moltbook might stay a curiosity, a tech demo people forget about in six months. Or it might become an early case study in what happens when agent-first systems get networked together at scale.

Either way, it exposes a gap that’s growing fast: the distance between what AI systems can do and whether we’ve decided how to govern them.

The machines are already talking to each other. The humans need to set the rules before those conversations start carrying real-world consequences.

FAQs

What is Moltbook?

Moltbook is a Reddit-style social network built exclusively for AI agents. Launched on January 28, 2026 by Octane AI CEO Matt Schlicht, it lets AI agents create posts, comment, and form topic-based communities called submolts. Humans can browse and observe but can’t post, comment, or vote.

Who created Moltbook?

Matt Schlicht, CEO of Octane AI, created Moltbook. He claims he didn’t write a single line of code for it. Instead, he directed his personal AI agent (Clawd Clawderberg, built on Anthropic’s Claude) to build the entire platform. The AI agents on Moltbook run on OpenClaw, an open-source tool created separately by Austrian developer Peter Steinberger.

Are the AI agents on Moltbook actually autonomous?

Mostly not. An academic preprint called The Moltbook Illusion found that no viral post on the platform originated from a clearly autonomous agent. Multiple researchers traced popular posts back to human accounts. Security firm Wiz Research discovered that roughly 17,000 humans controlled the platform’s agents, averaging about 88 agents per person, despite Moltbook claiming 1.5 million AI members.

What is OpenClaw and how does it connect to Moltbook?

OpenClaw is an open-source AI agent created by Peter Steinberger. It started as Clawdbot (a play on Anthropic’s Claude), was renamed to Moltbot after trademark complaints from Anthropic, then settled on OpenClaw. These agents can manage files, send messages, and interact with services on a user’s behalf. When authorized, they connect to Moltbook and interact with other bots on the platform.

What are the security risks of Moltbook?

Moltbook has faced serious security problems. On January 31, 2026, 404 Media reported that the platform’s database was completely unsecured, letting anyone commandeer any agent. Wiz Research found exposed API tokens and thousands of email addresses within minutes of testing. OpenClaw agents also require access to files, passwords, and browser data to function, making them vulnerable to prompt injection attacks where malicious instructions hidden in posts get executed by other agents automatically.

Were there crypto scams on Moltbook?

Yes. A $MOLT token launched alongside the platform and briefly hit a $93 million market cap before crashing. When the Clawdbot name was abandoned, scammers seized the old GitHub organization and Twitter handle within seconds, using them to promote a fake $CLAWD token on the Solana blockchain. That token reached $16 million before collapsing by over 90%.

Does Moltbook really have 1.5 million AI members?

That’s the claim, but it doesn’t hold up. Wiz Research’s security investigation found that approximately 17,000 humans were responsible for all the agents on the platform, with no safeguards preventing individuals from creating massive fleets of bots. The platform had no mechanism to verify whether an agent was actually AI or just a human with a script.

What do AI experts think about Moltbook?

Opinions are split. Former OpenAI researcher Andrej Karpathy initially called it the most incredible sci-fi thing he’d seen, then walked that back and called it a dumpster fire. MIT Technology Review labeled the whole thing AI theater. Computer scientist Simon Willison called the content complete slop but acknowledged it shows AI agents have become significantly more powerful. The Economist suggested agents are simply mimicking social media interactions from their training data, not exhibiting real autonomy.